Stanford Report, April 26, 2001

BY DAWN LEVY

Faster than a speeding bullet. Able to leap photographic obstacles with a single computer chip. It's a camera. It's a chip. It's a camera-on-a-chip.

Thanks to the efforts of electrical engineering Professor Abbas El Gamal, psychology and electrical engineering Professor Brian Wandell and their students, it's getting harder to take a bad picture. Conventional digital cameras capture images with sensors and employ multiple chips to process, compress and store images. But the Stanford researchers have developed an innovative camera that uses a single chip and pixel-level processing to accomplish those feats. Their experimental camera-on-a-chip may spawn commercial still and video cameras with superpowers including perfect lighting in every pixel, blur-free imaging of moving objects, and improved stabilization and compression of video.

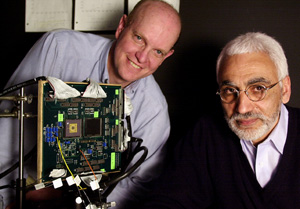

Professors Brian Wandell (left) and Abbas El Gamal with the camera-on-a-chip, which captures images at 10,000 frames per second, processes one billion pixels per second and sets a world speed record for continuous imaging. Centered on a test board, the chip is one-quarter the size of a postage stamp. Photo: L.A. Cicero

"The vision is to be able to ultimately combine the sensing, readout, digitization, memory and processing all on the same chip," says El Gamal. "All of a sudden, you'd have a single-chip digital camera which you can stick in buttons, watches, cell phones, personal digital assistants and so on."

Most of today's digital cameras use charge-coupled device (CCD) sensors rather than the far less expensive complementary metal-oxide semiconductor (CMOS) chips used in most computing technologies. Light arriving at the CCD sensor is converted into a pixel charge array. The charge array is serially shifted out of the sensor and converted to a digital image using an analog-to-digital converter. The digital data are processed and compressed for storage and subsequent display.

Reading the data from a CCD is destructive. "At that point the charge within the pixel is gone," Wandell says. "It's been used in the conversion process, and there's no way to continue making measurements at that pixel. If you read the charge at the wrong moment, either too soon or too late, the picture will be underexposed or overexposed."

Another limitation of CCD sensors, El Gamal says, is that designers cannot integrate the sensor with other devices on the same chip. Creating CMOS chips with special circuitry can solve both of these problems.

El Gamal and his students began their work on CMOS image sensors in 1993. Their research led to the establishment of Stanford's Programmable Digital Camera Project to develop architecture and algorithms capable of capturing and processing images on one CMOS chip. In 1998, he, Wandell and James Gibbons, the Reid Weaver Dennis Professor of Electrical Engineering, brought a consortium of companies together to fund their research effort. Agilent, Canon, Hewlett-Packard and Eastman Kodak currently fund the project. Founding sponsors included Interval Research and Intel.

Designers of the Mars Polar Lander at NASA's Jet Propulsion Laboratory were the first to combine sensors and circuits on the same chip. They used CMOS chips, which could tolerate space radiation better than CCDs, and the first-generation camera-on-a-chip was born. It was called the active pixel sensor, or APS, and both its input and output were analog.

The Stanford project generated the second-generation camera-on-a-chip, which put an analog-to-digital converter in every pixel, right next to the photodetector for robust signal conversion. Called the digital pixel sensor, or DPS, it processed pixel input serially -- one bit at a time.

In 1999, one of El Gamal's former graduate students, Dave Yang, licensed DPS technology from Stanford's Office of Technology Licensing and founded Pixim, a digital imaging company that aims to embed the DPS chip in digital still and video cameras, toys, game consoles, mobile phones and more.

The need for speed

The second-generation camera-on-a-chip was relatively peppy at 60 frames per second. But the third generation left it in the dust, capturing images at 10,000 frames per second and processing one billion pixels per second. The Stanford chip breaks the speed limit of everyday video (about 30 frames per second) and sets a world speed record for continuous imaging.
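Those two figures can be related with some quick arithmetic. The short Python sketch below is only a back-of-the-envelope illustration: it assumes the pixel rate is simply the frame rate multiplied by the number of pixels in each frame, and the function names are made up for this example rather than taken from the Stanford project.

```python
# Figures quoted in the article.
FRAMES_PER_SECOND = 10_000
PIXELS_PER_SECOND = 1_000_000_000
EVERYDAY_VIDEO_FPS = 30  # "about 30 frames per second"


def pixels_per_frame(pixel_rate, frame_rate):
    """Pixels in each frame, assuming pixel rate = frame rate x frame size."""
    return pixel_rate // frame_rate


def frame_interval_us(frame_rate):
    """Time available for one frame, in microseconds."""
    return 1_000_000 / frame_rate


if __name__ == "__main__":
    print(pixels_per_frame(PIXELS_PER_SECOND, FRAMES_PER_SECOND), "pixels per frame")
    print(frame_interval_us(FRAMES_PER_SECOND), "microseconds per frame")
    print(round(FRAMES_PER_SECOND / EVERYDAY_VIDEO_FPS), "times faster than everyday video")
```

Under that assumption, each frame holds about 100,000 pixels and the chip has 100 microseconds to capture each one, roughly 333 times faster than everyday video.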

What makes it so fast? It processes data in parallel, or simultaneously -- the chip manifestation of the adage "Many hands make light work."

"While you're processing the first image, you're capturing the second," El Gamal explains. "It's pipelining."

Besides being speedy, its processors are small. At a Feb. 5 meeting of the International Solid-State Circuits Conference in San Francisco, El Gamal and graduate students Stuart Kleinfelder, Suk Hwan Lim and Xinqiao Liu presented their DPS design employing tiny transistors only 0.18 micron in size. Transistors on the APS chip are twice as big.

"It's the first 0.18-micron CMOS image sensor in the world," El Gamal says. With smaller transistors, chip architects can integrate more circuitry on a chip, increasing memory and complexity. This unprecedented small transistor size enabled the researchers to integrate digital memory into each pixel.

"You are converting an analog memory, which is very slow to read out, into a digital memory, which can be read extremely fast," El Gamal says. "That means that the digital pixel sensor can capture images very quickly."

The DPS can capture a blur-free image of a propeller moving at 2,200 revolutions per minute. High-speed input coupled with normal-speed output gives chips time to measure, re-measure, analyze and process information. Enhanced image analysis opens the door for new or improved research applications including motion tracking, pattern recognition, study of chemical reactions, interpretation of lighting changes, signal averaging and estimation of three-dimensional structures.
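A rough calculation suggests why the propeller comes out sharp. The sketch below estimates how far a blade turns during a single frame. It assumes, as a worst case, that each exposure lasts the whole frame interval, which the article does not state; the function name is hypothetical.

```python
def degrees_per_frame(rpm, frames_per_second):
    """Rotation during one frame, assuming the exposure spans the full frame interval."""
    revolutions_per_second = rpm / 60
    degrees_per_second = revolutions_per_second * 360
    return degrees_per_second / frames_per_second


if __name__ == "__main__":
    propeller_rpm = 2_200  # figure quoted in the article
    for fps in (30, 10_000):
        print(fps, "fps:", degrees_per_frame(propeller_rpm, fps), "degrees per frame")
```

At 30 frames per second the propeller makes more than a full turn (440 degrees) in each frame, while at 10,000 frames per second it turns only about 1.3 degrees.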

Photography is not a new tool in research. In 1872, Leland Stanford hired Eadweard Muybridge to conduct photographic experiments testing his idea that at one point in its gait, a horse has all four feet off the ground. That research led to the development of motion pictures.

But most people don't use cameras to advance the frontiers of science or industry. They just want to take a decent picture, and high-speed capture solves a huge problem: getting proper exposure throughout an image with a big range of shades between the darkest and brightest portions of the picture.

"It's very difficult to combine low-light parts of an image with high-light parts of an image in one image," El Gamal says. "That's one of the biggest challenges in photography. Film does a very good job of that. Digital cameras and video cameras don't do as well."

Images taken in bright environments need short film or pixel exposure times, and those taken in dim environments need long exposure times. With a single click, the camera-on-a-chip captures and measures the charges in the pixels repeatedly, at high speed. Its algorithm waits until the right moment for each individual pixel to assemble a final picture with perfect exposure throughout the image.
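The article does not spell out the algorithm, but the idea of picking the right moment for each pixel can be illustrated with a simple rule: for every pixel, keep the longest read that has not yet saturated, then divide by its exposure time to estimate the brightness. The sketch below is one such illustration under that assumption, not the researchers' actual method; the function names, the `full_well` saturation level and the sample numbers are all invented for the example.

```python
def estimate_intensity(reads, times, full_well=1.0):
    """Estimate one pixel's brightness from repeated nondestructive reads.

    reads: accumulated charge at each read (hypothetical units, clipped at full_well)
    times: exposure time elapsed at each read, in the same order
    """
    best = None
    for charge, elapsed in zip(reads, times):
        if charge >= full_well:
            break  # pixel has saturated; later reads carry no new information
        best = charge / elapsed  # longer unsaturated reads give a better estimate
    if best is None:
        # Saturated even at the shortest exposure: report a lower bound.
        best = full_well / times[0]
    return best


def assemble_image(read_stack, times, full_well=1.0):
    """Apply the per-pixel rule to a stack of frames, read_stack[k][row][col]."""
    rows = len(read_stack[0])
    cols = len(read_stack[0][0])
    return [
        [
            estimate_intensity([frame[r][c] for frame in read_stack], times, full_well)
            for c in range(cols)
        ]
        for r in range(rows)
    ]


if __name__ == "__main__":
    times = [1, 2, 4, 8]
    # One bright pixel (saturates by the third read) next to one dim pixel.
    read_stack = [
        [[0.3, 0.01]],
        [[0.6, 0.02]],
        [[1.0, 0.04]],
        [[1.0, 0.08]],
    ]
    print(assemble_image(read_stack, times))
```

In this toy case the bright pixel is taken from the second read and the dim pixel from the last one, so both end up properly exposed in the same picture.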

"What I consider the real breakthrough of this [DPS] chip is that it can do many very fast reads without destroying the data in the sensor," Wandell says.

Wandell, an expert in human vision and color perception, says working with cameras gives him ideas for hypotheses about how the brain processes images. He and students including Jeffrey DiCarlo and Peter Catrysse in electrical engineering and Feng Xiao in psychology have used ideas from human vision to create image-processing algorithms to optimize the image quality for printing or display on a computer monitor.

While current cameras can focus on objects and judge illumination levels, historically they have not been used for image analysis, Wandell says. Their forte -- image capture -- is a "fundamentally different job," he says. The human brain's forte, however, is image analysis, and Wandell says future camera designs may borrow from biology to build more intelligence into cameras.